17-Concentration_Inequalities_and_the_Laws_of_Large_Numbers

在阅读下面的内容前,您可能需要先修读 微积分 - 极限 部分内容以便更好理解下面的讲解

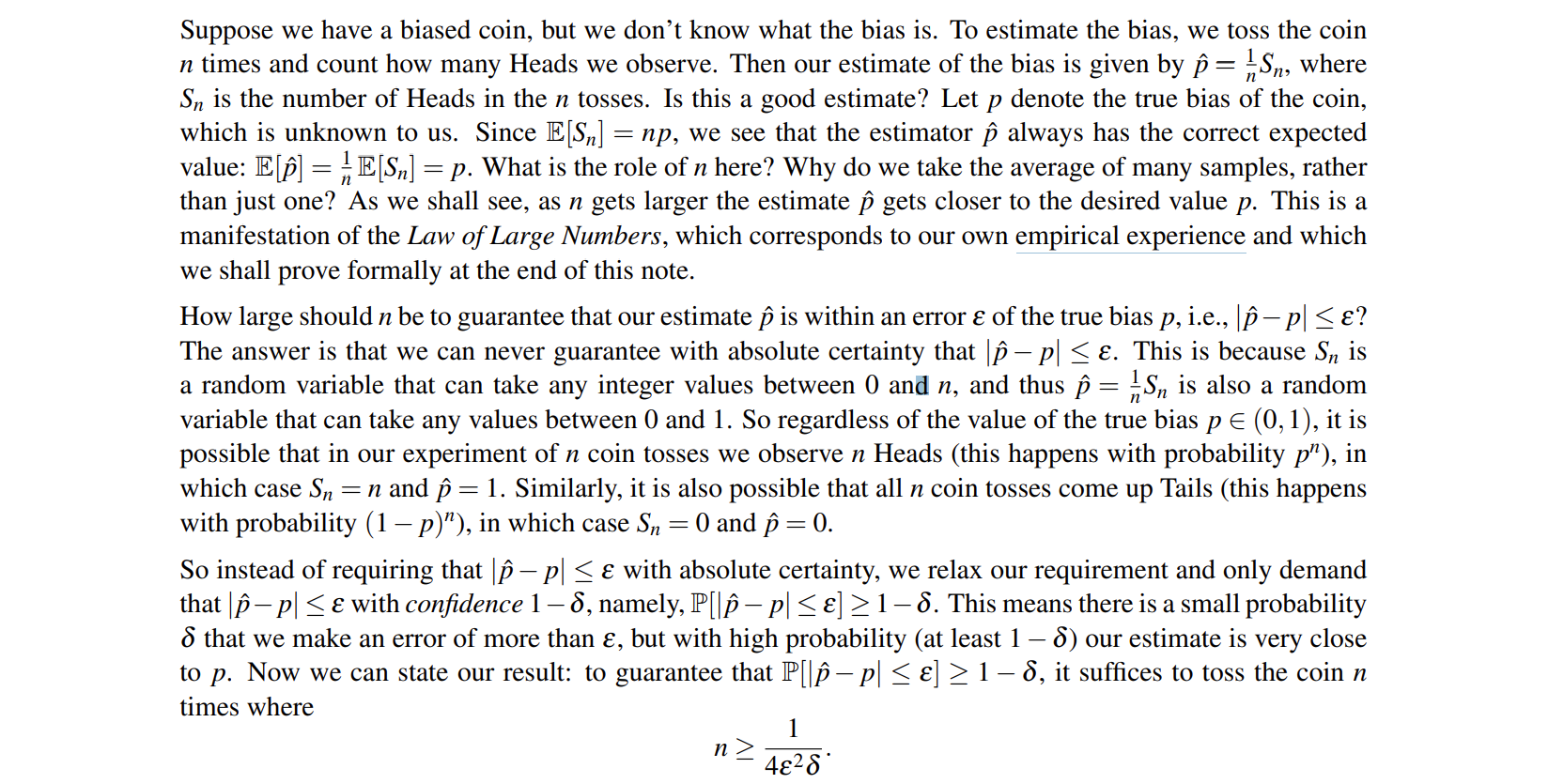

我们有时说一个东西的概率为 p ,但在 empirical experience(实践实验,与理论推理相对应) 中,我们要进行多少次才有足够的把握让实验概率

不难看出,这与微积分中证明极限存在时取 n 足够大的情况是一样的。

对于上边最后的结论,我们下面将一步一步进行证明。

I Markov’s Inequality(马尔可夫不等式)

(Markov’s Inequality). For a nonnegative random variable X (i.e., X(ω) ≥ 0 for all ω ∈ Ω) with finite mean,

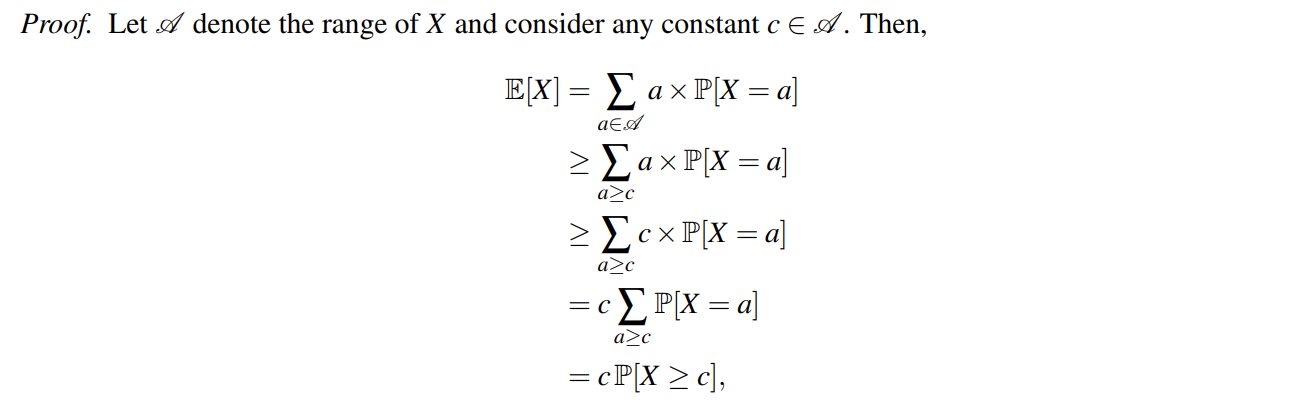

proof is shown below:

Indicator function

II Chebyshev’s Inequality(切比雪夫不等式)

(Chebyshev’s Inequality) For a random variable X with finite expectation E[X] = µ,

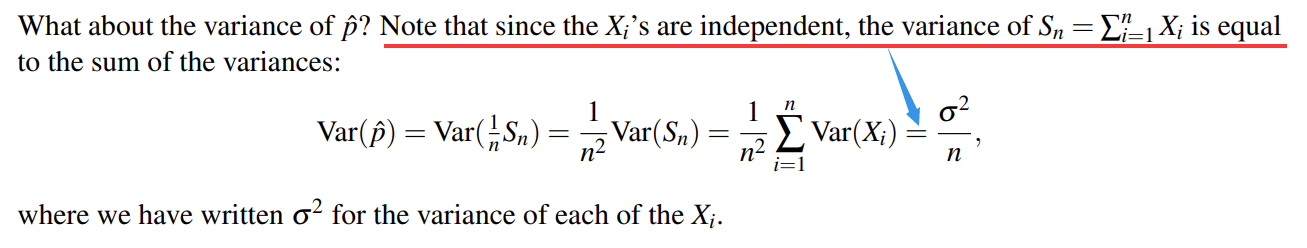

The proof of Chebyshev's Inequality is easy since we just need to take

using Markov’s Inequality,so we get that

take

which is of great importance.

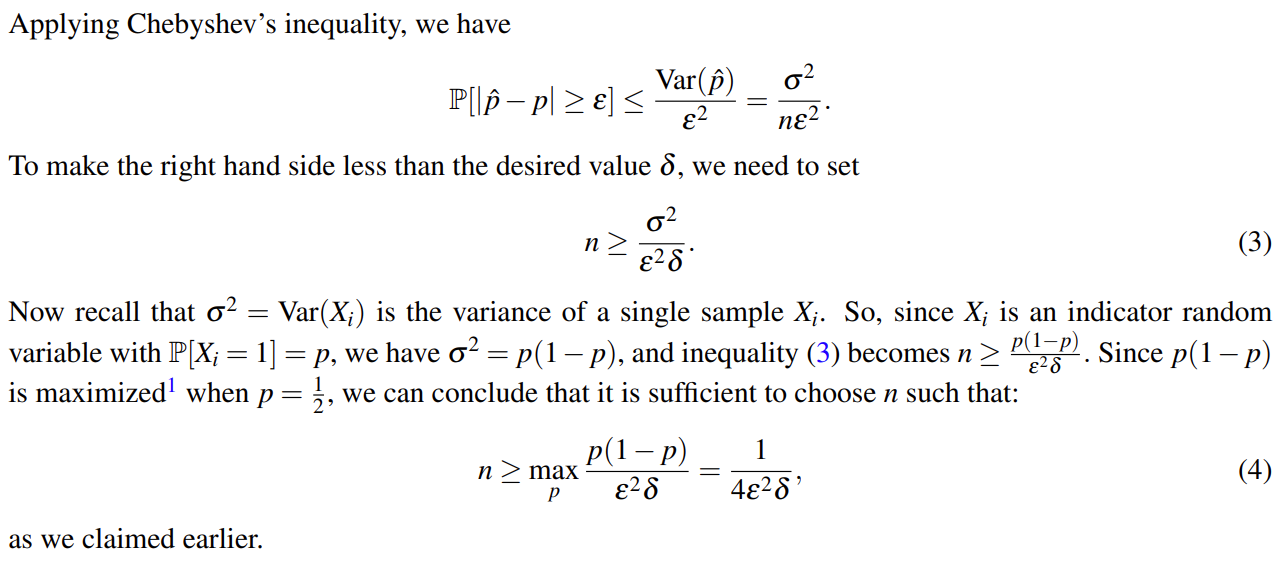

III Estimating the Bias of a Coin

Now, let's solve the problem come up with at the begin.

IV Law of Large Numbers(大数定律)

(Law of Large Numbers) . Let X1,X2,..., be a sequence of i.i.d. (independent and identically distributed) random variables with common finite expectation E[Xi ] = µ for all i. Then, their partial sums Sn = X1 +X2 +···+Xn satisfy

for every ε > 0, however small.

That means if n is big enough,